A conversation with Stephen Hooper

In this article, we continue the discussion on AI in design and manufacturing software with Stephen Hooper, Vice President of Software Engineering for Autodesk’s Design and Manufacturing Division. Part two can be found here.

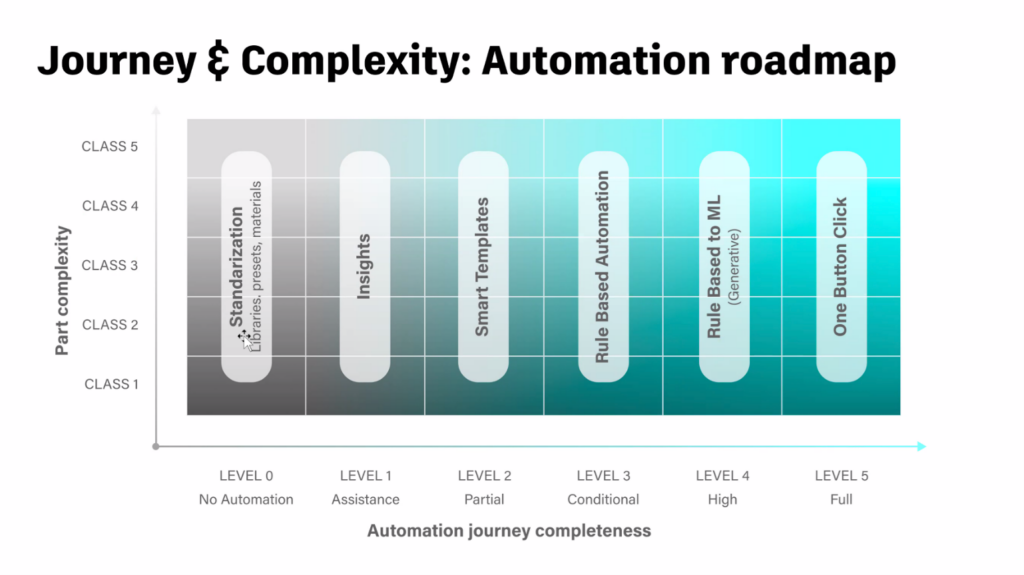

Engineering.com: I envisioned levels of automation for design software AI, similar to the SAE levels that classify autonomous driving capabilities, where level 5 indicates full autonomy. Design software with that capability would receive a prompt like “Hey AI, design a car” and would design and build a car. Level 0 is where we are now: we design and build everything, and the geometry is a little intelligent but mostly dumb. There are lots of levels in between. The first level could be what Mike Haley from Autodesk talked about: a natural language user interface. That could be the low-hanging fruit, removing the dependency on traditional icon- and menu-based systems.

Hooper: Some vendors claim this, and some startups have tried it. You’ll see a lot of new startups where a text-based input results in, say, a skateboard. It’s a little naive to think we could do much more, for a number of reasons. Take 2D graphics as an example. Say I write a prompt for an image of a dimly lit nighttime street scene in San Francisco: a side street with neon lights and a car parked at the curb. “AI, create this image for me.” It will create an image for you. The problem is that a large language model can get the same prompt three times and produce three different results. If you have a specific idea in mind, you need to start expanding the prompt. You need to say, “I want a green neon sign, I want the sign to say ‘Al’s Bar,’ and I want ‘Al’s Bar’ to be on the right side of the image, six feet off the ground. The car should be a Chevy pickup truck, and it should be red.” The problem is that to get accurate output, the prompt becomes so long and takes so long to define that you might as well create the image manually. The same is true for parametric modeling. For example, if I want a flat plate that’s 200 mils by 400 mils with six evenly spaced through-holes in the center, each six mils in diameter, it’s almost faster for me to draw a rectangle, put the holes in, and dimension it. I think a purely text-based product that provides a complete product definition is highly unlikely. I expect we’ll move toward what’s called a multimodal prompt, where you can provide an equation for the performance characteristics of the product. An engineer might provide some hand sketches, a short text description, and a table with some of the standard parts to use. I would call that a multimodal prompt package. You would hand that package to an AI that can accept multimodal input.
That AI would return a number of options that you can interact with, manipulate, and iteratively refine to arrive at the target result. Some things may be producible purely from a prompt, for example an M5 screw with a pitch of 1.5. But achieving a complete product definition will be much more difficult.
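Hooper’s flat-plate example shows why a parametric definition can be quicker than a prose prompt: the whole part reduces to a handful of numbers. The Python sketch that follows is an illustration only, not anything from Autodesk or Fusion. The `Hole` type and the `plate_holes` function are hypothetical, and the sketch assumes the six holes sit in a single row along the plate’s long centerline, with the end margin equal to the hole-to-hole pitch. Units are left generic (whatever unit the plate dimensions use).

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Hole:
    """A through-hole: center position and diameter, in the plate's length unit."""
    x: float
    y: float
    diameter: float


def plate_holes(length: float, width: float, count: int, diameter: float) -> list[Hole]:
    """Place `count` equal holes in one row along the plate's long centerline.

    Assumed (hypothetical) layout: pitch = length / (count + 1), so the gap from
    each end of the plate to the nearest hole equals the hole-to-hole pitch.
    """
    if count < 1:
        raise ValueError("count must be at least 1")
    pitch = length / (count + 1)
    # Reject layouts where holes would touch each other or break out of the plate.
    if diameter >= pitch or diameter >= width:
        raise ValueError("holes are too large for this layout")
    y = width / 2  # every hole sits on the long centerline
    return [Hole(x=pitch * (i + 1), y=y, diameter=diameter) for i in range(count)]


# The whole part is four numbers: a 400 x 200 plate, six holes, diameter 6.
holes = plate_holes(length=400, width=200, count=6, diameter=6)
for hole in holes:
    print(f"hole at x={hole.x:.2f}, y={hole.y:.2f}, diameter={hole.diameter}")
```

Editing a single argument, say `count=8`, regenerates the whole hole pattern; spelling out the same intent in a text prompt takes far more words, which is Hooper’s point.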

Engineering.com: There may be certain things I’m used to doing, certain shapes I’m used to using, or certain components. What if the AI could anticipate them? Let’s say I’m a bicycle designer and I usually use round tubes. Could the AI recognize from the line I draw that it’s going to be a tube and start drawing a tube? Can it use the shapes I’m familiar with? I would call that more of a design assist than fully automated design.

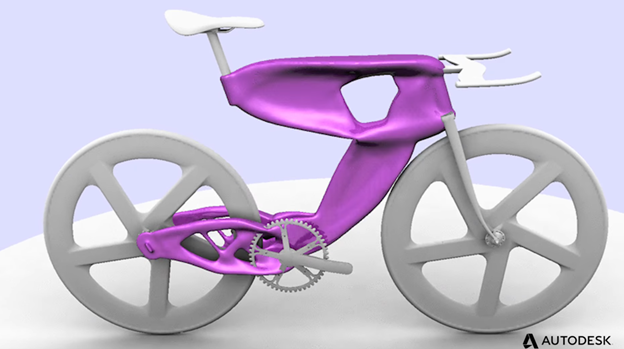

Hooper: Right now, I think people see it as static and asynchronous. To be really useful, it needs to be interactive and synchronous. In the bicycle example, you could draw a layout sketch and it would show you 16 options. You might say, “I like this option, but it’s not quite right yet, so I’ll tweak it a little.” Then it would come back and say, “Okay, based on your tweaks, I’m going to adjust it so you can make it from carbon fiber in a mold.”

Engineering.com: That’s the reason for my frustration with what’s been provided so far. We’re engineers and we’ve been given generative design. Generative design starts from scratch and gives us, excuse the term, garbage geometry. An experienced bicycle designer would want to start with a tubular design. A structural engineer might want to model with I-beams. Not lumps. We’re not going to use that.

Hooper: Some elements will be deterministic and others can be generated. The cross sections for steel structures will be 100% deterministic. It could be a 50×50×2.5 box section, an angle, or a W-150 I-beam. Those will be deterministic. Then, again, we’ll have this multimodal input. You could tell the system, “Here are the different types of steel elements I want to use.” Then you could give it a rough sketch to say, “I want a structure that’s three meters tall in this shape.” It will take the sketch and the list of standard content you want to use and create the structure for you.

Engineering.com: That’s what I would call design assist. It takes shapes and parts that I’m comfortable with, and that I’ve already recognized as optimal or standard, and starts using them. If I’m building a wall, I don’t want to have to draw the 2×4s. If I’m building a commercial building, I don’t want to have to draw I-beams. I don’t want to use blobs. Let me use round tubes, and let AI help me figure out where the joints between the round tubes should be. What is the optimal configuration of the round tubes for maximum strength and minimum weight?

By the way, no one has taken up my bike challenge and designed a bike frame that is better than the standard diamond frame made of tubes. Excuse my impatience, Stephen. I know the teams are trying hard, and they’ve put a lot into the CAD software. But when one part has been redesigned, I say, “It looks great, but what about the rest? Why can’t we do that?” Frankly, I love that Autodesk will spare me from annotating drawings. That’s great.

Hooper: To your point about levels, I would suggest further levels beyond that. The next level would be multidisciplinary. Today, you look at a 3D model, or someone using Cadence looks at a circuit board; there are different AIs for different disciplines. An AI that can work across a multidisciplinary model would be ideal. Beyond that comes systems architecture: I can generatively build a systems architecture for a product without doing a detailed design. I look at the interactions. I’ll have a black box for the software, a black box for the transmission, another for the suspension, and another for the electronics. We can build the systems architecture generatively, and then, at the next level, generatively build the actual details in each of the disciplines. Then I think we’ll get to a generative AI design platform.

Engineering.com: Okay, but please don’t call it generative design.

Hooper: I agree: not generative design in the historical sense, but a generative AI platform for design.

Engineering.com: The automatic annotation capability and the CNC AI you mentioned earlier sound excellent.

Hooper: Level 1 would be design review, and level 2 would eliminate the tasks that don’t add value.

Engineering.com: Removing the work we don’t want to deal with, because engineers hate annotating.

Hooper: Level 3 is design assist, level 4 is multidisciplinary, level 5 is system level and architecture, and level 6 is complete product definition.

Engineering.com: I’ll try to define those levels and share them with you. We hear from companies that they have AI, and I wonder: how much? A standard with levels would let everyone know whether they are at level one or level two.

Hooper: We are also secretive because there may be things we are working on that we don’t want to talk about.

Engineering.com: That’s what I thought, but I’ve been told that Fusion 360 has automatic annotations. Is that public knowledge?

Hooper: The annotations in Fusion will be available in the product soon. That’s public, but there may be other things we’re working on with Mike Haley that are secret.